Congratulations to Dr. Lia Haddadian

December 15, 2025

Our graduate research associate, Lia Haddadian, earned her PhD on December 15, 2025. Congratulations to Dr. Haddadian on this outstanding achievement. Her work was highlighted as one of the Outstanding Graduate accomplishments this year. More details are available in the college feature on her work at the following link:

News Post

Below is a brief overview of Dr. Haddadian’s dissertation.

Title: Enhancing Argumentative Writing in EFL Education Through AI-Powered Personalized Learning

Brief Abstract: This dissertation advances AI-powered, personalized writing support through a design-based research program that integrates synthesis, development, and validation. The first study systematically reviewed 18 empirical studies on automated writing evaluation (AWE) in adult EFL argumentative writing, identifying design principles and limitations that can inform pedagogically grounded AWE systems. Building on these insights, the second study developed and validated AI-based essay scoring models, demonstrating strong alignment between transformer-based models and human ratings, with promising but less mature performance from generative AI approaches. Together, the studies establish a validated and ethically grounded foundation for scalable, explainable, and pedagogically informed AI-supported writing environments.

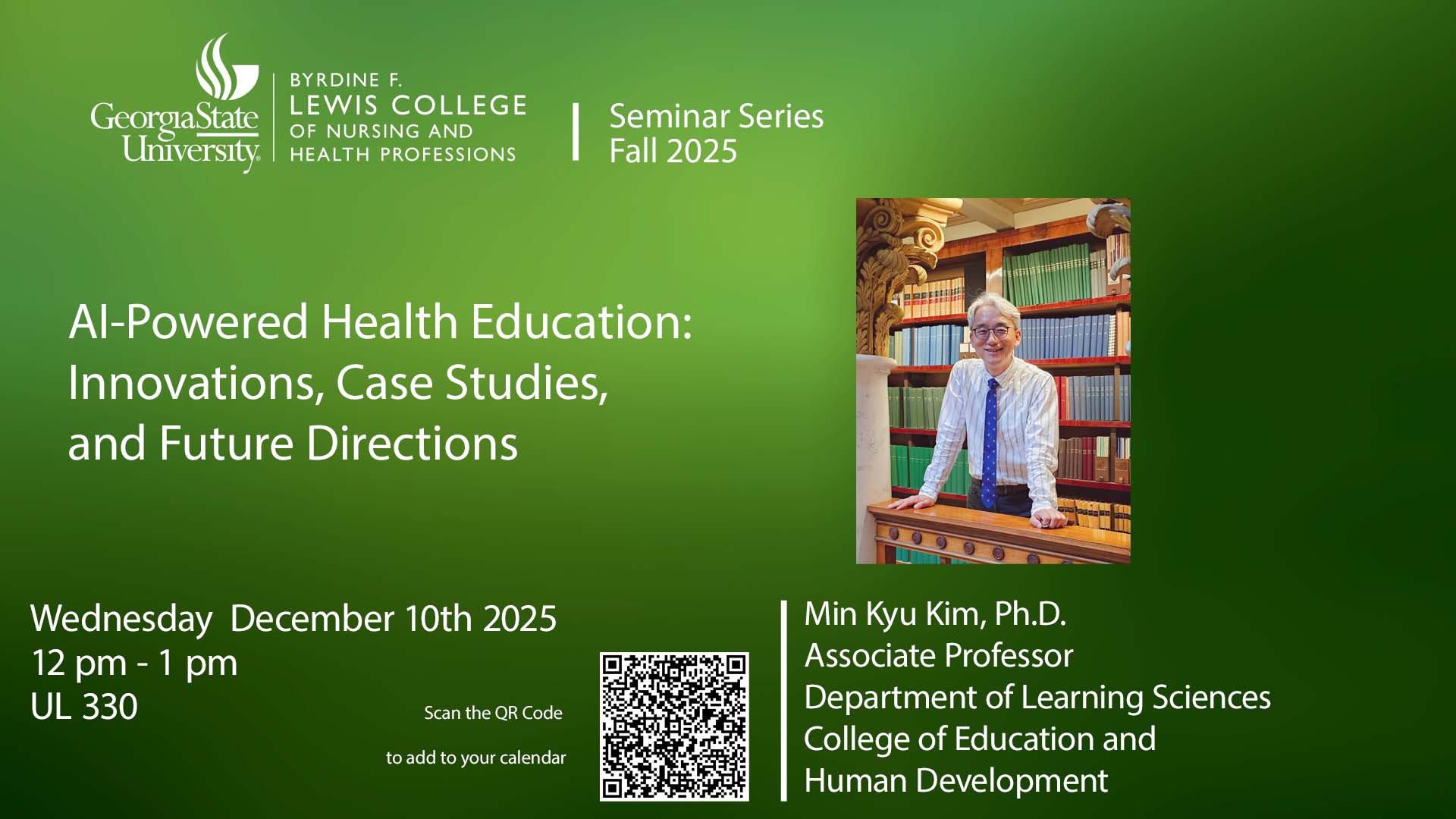

Dr. Kim was invited to present a talk for the Lewis College Seminar Series.

December 10, 2025

Dr. Kim presented an invited talk for the Lewis College Seminar Series on Wednesday, December 10, 2025, from 12:00 PM to 1:00 PM. The seminar consisted of a 45-minute presentation followed by a 15-minute Q&A session.

The topic of the presentation was “AI-Powered Health Education: Innovations, Case Studies, and Future Directions.” In the talk, Dr. Kim introduced multiple projects developed by his research team in collaboration with faculty and staff in the Lewis College School of Nursing. These projects include the deployment of SMART and Jill Watson in undergraduate- and graduate-level nursing courses over the past year. As a case study, Dr. Kim shared recent research findings from the Medical-Surgical I course in Spring 2025 and outlined plans for further analyses using scaled-up data from multiple nursing courses to examine the combined impact of AI agents in authentic instructional settings.

In addition, Dr. Kim demonstrated ongoing development projects, including AI-Powered Multimodal Instruction for Growth (AIM HIGH), an AI-driven instructional platform, and AIM HIGH SIM, an AI-augmented nursing simulation environment. The presentation also highlighted advanced multimodal representation and analytics features that his lab is currently developing to extend AIM HIGH in nursing education.

Dr. Kim joined the AI and Language Education Panel at World Languages Day 2025.

October 17, 2025

Dr. Kim participated in the World Languages Day 2025 panel hosted by the Center for Urban Language Teaching and Research (CULTR) at Georgia State University (https://cultr.gsu.edu/wld/). The panel discussion, held virtually on the morning of October 17, 2025, focused on the theme of AI and language education.

This year, CULTR’s World Languages Day featured a pre-recorded panel designed for audiences interested in exploring the intersection of language and artificial intelligence. The panel opened with remarks by Dr. Hakyoon Lee and was moderated by Dr. Tim Jansa. Dr. Kim and two other panelists shared insights into how language skills and global competency have shaped their careers and addressed questions about AI usage and development.

Dr. Min Kyu Kim

Dr. Kim holds a Ph.D. in Learning, Design, and Technology from the University of Georgia and is an associate professor in GSU’s Department of Learning Sciences. As the founder of the AI2 Research Laboratory, he has many years of experience conducting research on AI-driven personalized learning, technology-enhanced assessment, and learner engagement in digital environments, with the goal of creating equitable learning environments.

Dr. Marta Galindo

Dr. Galindo is currently the director of the Center for International Resources and Collaborative Language Engagement. Dr. Galindo’s research focuses on the use of language learning technology and AI.

Darrah DeVane

Darrah DeVane, who earned a Master of Arts in Applied Linguistics from GSU in May 2025, is currently a Learning Designer at Duolingo, where she draws on her proficiency in Korean and English to create and design language courses.

Jinho presented SMART research at the NSF AI ALOE National Virtual Research Showcase.

October 16, 2025

Our graduate research associate, Jinho Kim, presented SMART research at the NSF AI ALOE National Virtual Research Showcase. The showcase, held from 1–4 PM (EDT) on October 16, 2025, was NSF AI ALOE’s first national event. This interactive online gathering brought together more than one hundred researchers, educators, and innovators to explore cutting-edge advancements in AI for learning and education. You can watch the video recording of the virtual showcase by clicking the image.