Congratulations to Dr. Lia Haddadian

December 15, 2025

Our graduate research associate, Lia Haddadian, earned her PhD on December 15, 2025. Congratulations to Dr. Haddadian on this outstanding achievement. Her work was highlighted as one of the Outstanding Graduate accomplishments this year. More details are available in the college feature on her work at the following link:

News Post

Below is a brief overview of Dr. Haddadian’s dissertation.

Title: Enhancing Argumentative Writing in EFL Education Through AI-Powered Personalized Learning

Brief Abstract: This dissertation advances AI-powered, personalized writing support through a design-based research program integrating synthesis, development, and validation. The first study systematically reviewed 18 empirical studies on automated writing evaluation (AWE) in adult EFL argumentative writing, identifying design principles and limitations that inform pedagogically grounded AWE systems. Building on these insights, the second study developed and validated AI-based essay scoring models, demonstrating strong alignment between transformer-based models and human ratings, with emerging but less mature performance from generative AI approaches. Together, the studies establish a validated and ethically grounded foundation for scalable, explainable, and pedagogically informed AI-supported writing environments.

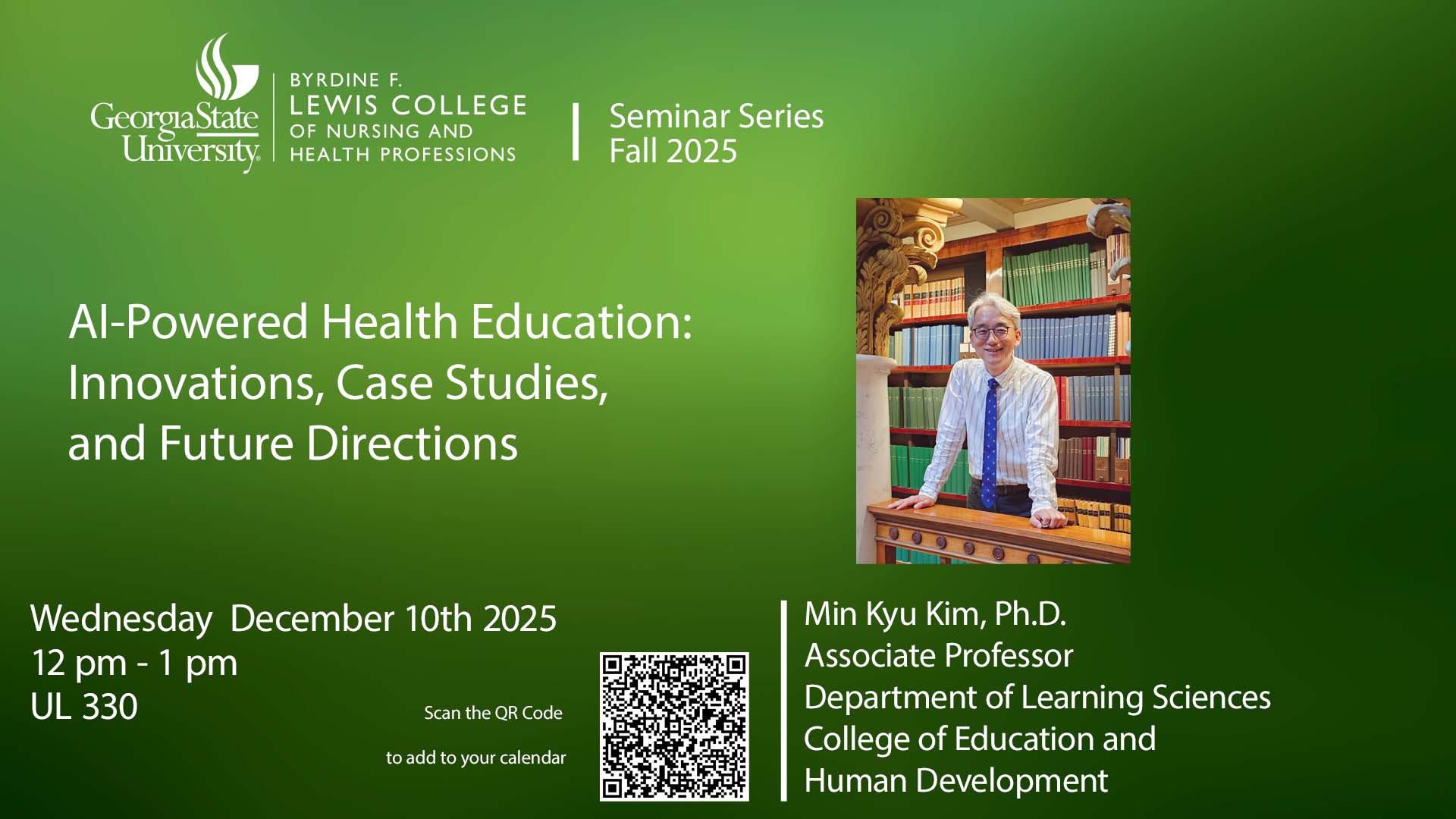

Dr. Kim was invited to present a talk for the Lewis College Seminar Series.

December 10, 2025

Dr. Kim presented an invited talk for the Lewis College Seminar Series on Wednesday, December 10, 2025, from 12:00 PM to 1:00 PM. The seminar consisted of a 45-minute presentation followed by 15 minutes of Q&A.

The topic of the presentation was “AI-Powered Health Education: Innovations, Case Studies, and Future Directions.” In the talk, Dr. Kim introduced multiple projects developed by his research team in collaboration with faculty and staff in the Lewis College School of Nursing. These projects include the deployment of SMART and Jill Watson in undergraduate- and graduate-level nursing courses over the past year. As a case study, Dr. Kim shared recent research findings from the Medical Surgical I course in Spring 2025 and outlined plans for further analyses using scaled-up data from multiple nursing courses to examine the combined impacts of AI agents in authentic instructional settings.

In addition, Dr. Kim demonstrated ongoing development projects, including AI-Powered Multimodal Instruction for Growth (AIM HIGH), an AI-driven instructional platform, and AIM HIGH SIM, an AI-augmented nursing simulation environment. The presentation also highlighted advanced multimodal representations and analytics features currently under development in his lab to further advance AIM HIGH in nursing education.

Dr. Kim joined the AI and Language Education Panel at World Languages Day 2025.

October 17, 2025

Dr. Kim participated in the Center for Urban Language Teaching and Research (CULTR) World Languages Day 2025 Panel at Georgia State University (https://cultr.gsu.edu/wld/). The panel discussion, held virtually on the morning of October 17, 2025, focused on the theme of AI and language education.

This year, CULTR’s World Languages Day featured a pre-recorded panel designed for audiences interested in exploring the intersection of language and artificial intelligence. With opening remarks by Dr. Hakyoon Lee and moderation by Dr. Tim Jansa, Dr. Kim, along with two other panelists, shared insights on how language skills and global competency have shaped their careers while addressing questions about AI usage and development.

Dr. Min Kyu Kim

Dr. Kim holds a Ph.D. in Learning, Design, and Technology from the University of Georgia and is an associate professor in GSU’s Department of Learning Sciences. As founder of the AI2 Research Laboratory, he has many years of experience researching AI-driven personalized learning, technology-enhanced assessment, and learner engagement in digital environments, with the aim of creating equitable learning environments.

Dr. Marta Galindo

Dr. Galindo is currently the director of the Center for International Resources and Collaborative Language Engagement. Dr. Galindo’s research highlights language learning technology usage and AI.

Darrah DeVane

Darrah DeVane graduated from GSU with a Master of Arts in Applied Linguistics in May 2025 and is currently a Learning Designer at Duolingo, where she uses her proficiency in Korean and English to design language courses.

Jinho presented SMART research at the NSF AI ALOE National Virtual Research Showcase.

October 16, 2025

Our graduate research associate, Jinho Kim, presented SMART research at the NSF AI ALOE National Virtual Research Showcase. The showcase, held from 1 to 4 PM (EDT) on October 16, 2025, marked NSF AI ALOE’s first national event. This interactive online gathering brought together more than one hundred researchers, educators, and innovators to explore cutting-edge advancements in AI for learning and education. You can watch the video recording of the virtual showcase by clicking the image.

Lia published an article in the International Journal of Educational Technology in Higher Education

October 1, 2025

Congratulations to Lia on her recent publication! Lia has been actively collaborating with external scholars and building her own research network. This publication is a wonderful outcome of those efforts and reflects her growing scholarly engagement.

Noroozi, O., Haddadian, G., Gao, X., Schunn, C., Alqassab, M., & Banihashem, S. K. (2025). The value of GenAI for peer feedback provision: Student perceptions and impacts. International Journal of Educational Technology in Higher Education, 22(61). https://doi.org/10.1186/s41239-025-00558-6

Abstract: Generative Artificial Intelligence (GenAI) has sparked a global debate on its potential as a feedback source for students, yet research in this area remains limited. This study explores students’ use of GenAI during peer feedback provision. Fifty-four graduate students enrolled in a master’s course in the food science domain at a Dutch university received instruction on the effective and ethical use of GenAI. They then wrote an argumentative essay, provided feedback to peers, and revised their essays. Finally, students completed an online questionnaire regarding their perceptions and use of GenAI for peer feedback provision. Descriptive analyses were applied to survey data and comment data were coded quantitatively for the presence of comment features. The results revealed that just over half of the students chose not to use GenAI for peer feedback provision, primarily because they believed they would learn more by completing the task independently. The remaining students used GenAI to improve both high-level and low-level aspects of their feedback, and most of these students found GenAI to be moderately helpful for peer feedback provision. In terms of its impact on the peer feedback content, students who used GenAI provided more suggestions for high-level issues and offered less mitigating praise for low-level issues compared to those who did not use GenAI for peer feedback provision. These results offer valuable insights for the design and adoption of GenAI tools to enhance peer feedback practices.

A workshop proposal has been accepted for the Conference of the Southern Nursing Research Society.

August 18, 2025

The proposal we submitted in collaboration with Dr. Kyungeh An, Assistant Dean for Research in the School of Nursing, and other nursing faculty—Optimization of AI Integration for Nursing Education & Research—has been accepted for a pre-conference workshop at the 40th Annual Conference of the Southern Nursing Research Society (SNRS), to be held February 19–20, 2026, at the Renaissance Austin Hotel in Austin, Texas. This is a highly competitive opportunity, with only one or two proposals selected from approximately 30 applications. Notably, it will also mark the first jointly organized event between our team and the School of Nursing.

Jinho, Seora, and I will be joining the workshop in February 2026. Hooray!

Dr. Min Kyu Kim’s Invited Talk at the Science and Cyberinfrastructure for Discovery (SCD) Symposium

September 10, 2025

Dr. Min Kyu Kim delivered an invited talk at Georgia State University’s Science and Cyberinfrastructure for Discovery (SCD) Symposium on September 10, 2025, at the Student Center East - State Ballroom. Hosted by Advanced Research Computing Technology, Innovation & Collaboration (ARCTIC), the SCD Symposium fosters collaboration across disciplines through the exchange of research and ideas.

Dr. Kim’s presentation, AI for Education, highlighted recent research and development in artificial intelligence for educational contexts. He introduced the five NSF AI Institutes in Education, showcasing innovative approaches to AI research, and placed particular emphasis on projects such as SMART, AIM-HIGH, and AI-NSF, while also sharing other AI-based initiatives currently underway in his lab.

Link: https://arctic.gsu.edu/training/scd/

AI-ALOE Mini Retreat

September 5, 2025

AI-ALOE’s mini retreat was held virtually on September 5, with more than 40 participants joining for a half-day of presentations and discussions. The retreat gave all teams an opportunity to share updates and discuss their Specific, Measurable, Achievable, Relevant, and Time-bound (SMART) goals.

Our SMART team (Dr. Min Kyu Kim, Jinho Kim, Yoojin Bae) participated in the retreat. Dr. Kim presented the team’s SMART plan, titled SMART Squared, which outlined our SMART technology implementation and data analysis priorities, as well as the confluence of AI-ALOE technologies in nursing courses at GSU.

Read more about the AI-ALOE mini retreat here: https://aialoe.org/ai-aloe-hosts-mini-retreat-to-advance-research-and-collaboration/

Jinho Kim and Yoojin Bae Elected to the 2025–2026 Board of the ILSSA.

September 1, 2025

We are excited to share that Jinho Kim and Yoojin Bae have been elected to the 2025–2026 board of the International Learning Sciences Student Association (ILSSA).

ILSSA is part of the International Society of the Learning Sciences (ISLS) and represents undergraduate students, graduate students, and postdoctoral researchers in the field of learning sciences.

Our graduate associate, Jinho Kim, has been elected Secretary, while Yoojin Bae will serve as the Asia-Pacific Regional Representative. In addition, both will represent ILSSA on other ISLS committees: Jinho Kim on the Technology Committee and the Hybrid Engagement Sub-Committee, and Yoojin Bae on the Membership Committee.

Get to know more about ILSSA here: https://sites.google.com/view/ilssa/meet-the-board

Jinho Kim Presents on Education with Generative AI at KENTECH

August 20, 2025

Our graduate associate, Jinho Kim, gave a presentation on Education with Generative AI to students at the Korea Institute of Energy Technology (KENTECH). The talk took place during the July Residential College (RC) Master’s Tea Time, where she introduced projects she is involved in, including AI-ALOE and AI-Powered Nursing Education.

Afterwards, Jinho talked with KENTECH students about how they are experiencing and using generative AI. They also discussed their research interests, perspectives, and pathways for pursuing graduate studies.